Waiting for Postgres 18: Accelerating Disk Reads with Asynchronous I/O

With the Postgres 18 Beta 1 release this week, a multi-year effort and significant architectural shift in Postgres is taking shape: Asynchronous I/O (AIO). These capabilities are still under active development, but they represent a fundamental change in how Postgres handles I/O, offering the potential for significant performance gains, particularly in cloud environments where latency is often the bottleneck.

While some features may still be adjusted or dropped during the beta period before the final release, now is the best time to test and validate how Postgres 18 performs in practice. In Postgres 18, AIO is limited to read operations; writes remain synchronous, though support may expand in future versions.

In this post, we explain what asynchronous I/O is, how it works in Postgres 18, and what it means for performance optimization.

Why asynchronous I/O matters

Postgres has historically operated under a synchronous I/O model, meaning every read request is a blocking system call. The database must pause and wait for the operating system to return the data before continuing. This design introduces unnecessary waits on I/O, especially in cloud environments where storage is often network-attached (e.g. Amazon EBS) and I/O can have over 1ms of latency.

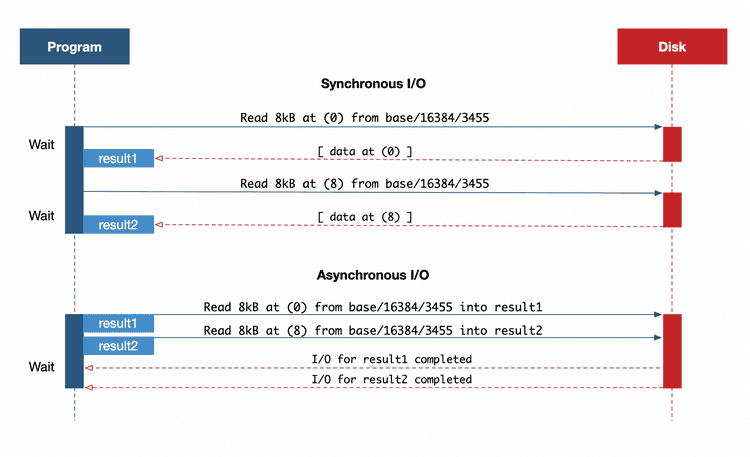

In a simplified model, we can illustrate the difference like this, ignoring any prefetching/batching the Linux kernel might do:

You can picture synchronous I/O like an imaginary librarian who retrieves one book at a time, returning before fetching the next. This inefficiency compounds as the number of physical reads for a logical operation increases.

Asynchronous I/O eliminates that bottleneck by allowing programs to issue multiple read requests concurrently, without waiting for prior reads to return. In an async program flow, I/O requests are scheduled to be read into a memory location and the program waits for completion of those reads, instead of issuing each read individually.

How Postgres 17’s read streams paved the way

The work to implement asynchronous I/O in Postgres has been many years in the making. Postgres 17 laid essential groundwork by introducing the read stream APIs, an internal abstraction that standardized how read operations are issued across different subsystems and streamlined the use of posix_fadvise() to request that the operating system prefetch data in advance.

However, this advisory mechanism only hinted to the kernel to load data into the OS page cache, not into Postgres’ own shared buffers. Postgres still had to issue syscalls for each read, and OS readahead behaviour is not always consistent.

The upcoming Postgres 18 release removes this indirection. With true asynchronous reads, data is fetched directly into shared buffers by the database itself, bypassing reliance on kernel-level heuristics and enabling more predictable, higher-throughput I/O behavior.

New io_method setting in Postgres 18

To control the mechanism used for asynchronous I/O, Postgres 18 introduces a new configuration parameter: io_method. This setting determines how read operations are dispatched under the hood, and whether they’re handled synchronously, offloaded to I/O workers, or submitted directly to the kernel via io_uring.

The io_method setting must be set in postgresql.conf and cannot be changed without restarting. It controls which I/O implementation Postgres will use and is essential to understand when tuning I/O performance in Postgres 18. There are three possible settings for io_method, with the current default (as of Beta 1) being worker.
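As a minimal sketch, the active method can be inspected from any session before changing it. The example uses ALTER SYSTEM, which writes to postgresql.auto.conf rather than postgresql.conf itself; choosing 'io_uring' here is only an illustration and assumes a Linux server whose Postgres build supports it.

```sql
-- Show the active I/O method and how the setting may be changed.
-- A context of 'postmaster' means a server restart is required.
SELECT name, setting, context
FROM pg_settings
WHERE name = 'io_method';

-- Persist a different method. The new value only takes effect after
-- the next server restart. 'io_uring' is an example value and assumes
-- a Linux build of Postgres with io_uring support.
ALTER SYSTEM SET io_method = 'io_uring';
```

After the restart, running SHOW io_method; should confirm which method is in use.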

io_method = sync

The sync setting in Postgres 18 mirrors the synchronous behavior of Postgres 17. Reads are still synchronous and blocking, using posix_fadvise() to achieve read-ahead in the Linux kernel.

io_method = worker

The worker setting utilizes dedicated I/O worker processes running in the background that retrieve data independently of query execution. The main backend process enqueues read requests, and these workers interact with the Linux kernel to fetch data, which is then delivered into shared buffers, without blocking the main process.

The number of I/O workers can be configured through the new io_workers setting, and defaults to 3. These workers are always running, and shared across all connections and databases.
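One way to see the workers described above is to query the settings and activity views. This is a sketch: it relies on the 'io worker' backend type shown later in this post, and it checks the context column instead of assuming how io_workers can be changed.

```sql
-- How many I/O workers are configured, and what is needed to change
-- the value? (Read the context column rather than assuming.)
SELECT name, setting, context
FROM pg_settings
WHERE name = 'io_workers';

-- List the I/O worker processes that are currently running.
SELECT pid, backend_type, wait_event_type, wait_event
FROM pg_stat_activity
WHERE backend_type = 'io worker';
```

With io_method = worker and default settings, the second query would be expected to return one row per configured worker.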

io_method = io_uring

This Linux-specific method uses io_uring, a high-performance I/O interface introduced in kernel version 5.1. Asynchronous I/O has been available in Linux since kernel version 2.5, but it was largely considered inefficient and hard to use. io_uring establishes a shared ring buffer between Postgres and the kernel, minimizing syscall overhead. This is the most efficient option, eliminating the need for I/O worker processes entirely, but is only available on newer Linux kernels and requires file systems and configurations compatible with io_uring support.

Important note: As of the Postgres 18 Beta 1, asynchronous I/O is supported for sequential scans, bitmap heap scans, and maintenance operations like VACUUM.

Asynchronous I/O in action

Asynchronous I/O delivers the most noticeable gains in cloud environments where storage is network-attached, such as Amazon EBS volumes. In these setups, individual disk reads often take multiple milliseconds, introducing substantial latency compared to local SSDs.

With traditional synchronous I/O, each of these reads blocks query execution until the data arrives, leading to idle CPU time and degraded throughput. By contrast, asynchronous I/O allows Postgres to issue multiple read requests in parallel and continue processing while waiting for results. This reduces query latency and enables much more efficient use of available I/O bandwidth and CPU cycles.

Benchmark on AWS: Doubling read performance & even greater gains from io_uring

To evaluate the performance impact of asynchronous I/O, we benchmarked a representative workload on AWS, comparing Postgres 17 with Postgres 18 using different io_method settings. The workload remained identical across versions, allowing us to isolate the effects of the new I/O infrastructure.

We tested on an AWS c7i.8xlarge instance (32 vCPUs, 64 GB RAM), with a dedicated 100GB io2 EBS volume for Postgres, with 20,000 provisioned IOPS. The test table was 3.5GB in size:

CREATE TABLE test(id int);

INSERT INTO test SELECT * FROM generate_series(0, 100000000);

test=# \dt+

List of relations

Schema | Name | Type | Owner | Persistence | Access method | Size | Description

--------+------+-------+----------+-------------+---------------+---------+-------------

public | test | table | postgres | permanent | heap | 3458 MB |

(1 row)

Between test runs we cleared the OS page cache (sync; echo 3 > /proc/sys/vm/drop_caches), and restarted Postgres, to gather cold cache results. Warm cache results represent running the query a second time. We repeated the complete test run for each configuration multiple times, retaining the best result out of three.

Whilst we also tested with parallel query, to keep results easier to understand all results below are with parallel query turned off (max_parallel_workers_per_gather = 0).
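The session setup for a single timed run could look roughly like the following. This is a sketch of the procedure described above, not the exact script used for the benchmark; note that \timing is a psql meta-command rather than SQL.

```sql
-- Disable parallel query for this session only, as in the benchmark.
SET max_parallel_workers_per_gather = 0;

-- Confirm which I/O method the server is running with.
SHOW io_method;

-- \timing is a psql meta-command (not SQL) that prints elapsed time
-- after each statement.
\timing on
SELECT COUNT(*) FROM test;
```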

Cold cache results:

Postgres 17, using synchronous I/O, established the baseline. It showed consistent read latency, but throughput was limited by the need to complete each I/O request before issuing the next:

test=# SELECT COUNT(*) FROM test;

count

-----------

100000001

(1 row)

Time: 15830.880 ms (00:15.831)

Postgres 18, when configured with io_method = sync, performed nearly identically, confirming that behavior remains unchanged without enabling asynchronous I/O:

test=# SELECT COUNT(*) FROM test;

count

-----------

100000001

(1 row)

Time: 15071.089 ms (00:15.071)

However, when we switch to the worker method with 3 I/O workers (the default), a clear improvement shows:

test=# SELECT COUNT(*) FROM test;

count

-----------

100000001

(1 row)

Time: 10051.975 ms (00:10.052)

We observed some gains by raising the number of I/O workers, but the biggest improvement comes when utilizing io_uring:

test=# SELECT COUNT(*) FROM test;

count

-----------

100000001

(1 row)

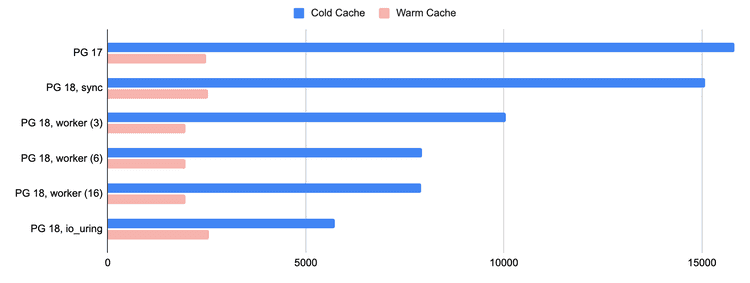

Time: 5723.423 ms (00:05.723)When we graph this (measuring runtime in ms, lower is better), it’s clear that Postgres 18 performs significantly better in cold cache situations:

For cold cache tests, both worker and io_uring delivered a consistent 2-3x improvement in read performance compared to the legacy sync method.

Whilst worker offers a slight benefit for warm cache tests due to its parallelism, io_uring consistently performed better in cold cache tests, and its lower syscall overhead and reduced process coordination would make io_uring the recommended setting for maximizing I/O performance in Postgres 18.

This performance shift for disk reads has meaningful implications for infrastructure planning, especially in cloud environments. By reducing I/O wait time, asynchronous reads can substantially increase query throughput and reduce latency and CPU overhead. For read-heavy workloads, this may translate into smaller instance sizes or better utilization of existing resources.

Tuning effective_io_concurrency

In Postgres 18, effective_io_concurrency becomes more interesting, but only when used with an asynchronous io_method such as worker or io_uring. Previously, this setting merely advised the OS to prefetch data using posix_fadvise. Now, it directly controls how many asynchronous read-ahead requests Postgres issues internally.

The number of blocks read ahead is influenced by both effective_io_concurrency and io_combine_limit, following the general formula:

maximum read-ahead = effective_io_concurrency × io_combine_limit

This gives DBAs and engineers greater control over I/O behavior. The optimal value requires benchmarking, as it depends on your I/O subsystem. For example, higher values may benefit cloud environments with high latency that also support high concurrency, like AWS EBS with high provisioned IOPS.
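To make the formula concrete, the following sketch computes the product from the live settings. It assumes that pg_settings reports io_combine_limit in units of blocks, so multiplying by block_size converts the result to bytes; verify the unit column on your server before relying on the output.

```sql
-- Sketch: maximum read-ahead according to the formula above.
-- Assumes pg_settings reports io_combine_limit in blocks.
SELECT eic.setting::int                    AS effective_io_concurrency,
       icl.setting::int                    AS io_combine_limit_blocks,
       eic.setting::int * icl.setting::int AS max_read_ahead_blocks,
       pg_size_pretty(eic.setting::bigint
                      * icl.setting::bigint
                      * current_setting('block_size')::bigint)
                                           AS max_read_ahead
FROM pg_settings AS eic
CROSS JOIN pg_settings AS icl
WHERE eic.name = 'effective_io_concurrency'
  AND icl.name = 'io_combine_limit';
```

As a worked example, assuming effective_io_concurrency = 16 and an io_combine_limit of 128kB (16 blocks of 8kB, matching the 131072-byte reads in the pg_aios output later in this post), the formula gives 16 × 16 = 256 blocks, or 2 MiB of read-ahead.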

When doing our benchmarks, we also tested higher effective_io_concurrency (between 16 and 128) but did not see a meaningful difference. However, that is likely due to the simple test query used.
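For experiments like these, effective_io_concurrency can be changed for a single session without touching the server configuration. A minimal sketch, where the value 64 is only an example from the tested range:

```sql
-- Try a higher value for this session only.
SET effective_io_concurrency = 64;
SHOW effective_io_concurrency;

-- Re-run the test query, ideally on a cold cache.
SELECT COUNT(*) FROM test;

-- Return to the server-wide setting afterwards.
RESET effective_io_concurrency;
```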

It’s worth noting that the previous default of effective_io_concurrency was 1 in Postgres 17, which is now raised to 16, based on benchmarks done by the Postgres community.

Monitoring I/Os in flight with pg_aios

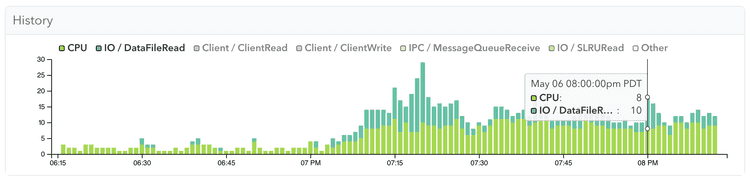

As mentioned, previous versions of Postgres with synchronous I/O made it easy to spot read delays: the backend process would block while waiting for disk access, and monitoring tools like pganalyze can reliably surface IO / DataFileRead as a wait event during these stalls.

For example, here we can see wait events clearly in Postgres 17 synchronous I/O.

With asynchronous I/O in Postgres 18, backend wait behavior changes. When using io_method = worker, the backend process delegates reads to a separate I/O worker. As a result, the backend may appear idle or show the new IO / AioIoCompletion wait event, while the I/O worker shows the actual I/O wait events:

SELECT backend_type, state, wait_event_type, wait_event

FROM pg_stat_activity

WHERE backend_type = 'client backend' OR backend_type = 'io worker';

backend_type | state | wait_event_type | wait_event

----------------+--------+-----------------+-----------------

client backend | active | IO | AioIoCompletion

io worker | | IO | DataFileRead

io worker | | IO | DataFileRead

io worker | | IO | DataFileRead

(4 rows)

With io_method = io_uring, read operations are submitted directly to the kernel and completed asynchronously. The backend does not block on a traditional I/O syscall, so this activity is not visible from the Postgres side, even though I/O is in progress.

To help with debugging of I/O requests in flight, the new pg_aios view can show Postgres internal state, even when using io_uring:

SELECT * FROM pg_aios;

pid | io_id | io_generation | state | operation | off | length | target | handle_data_len | raw_result | result | target_desc | f_sync | f_localmem | f_buffered

-------+-------+---------------+--------------+-----------+-----------+--------+--------+-----------------+------------+---------+--------------------------------------------------+--------+------------+------------

91452 | 1 | 4781 | SUBMITTED | read | 996278272 | 131072 | smgr | 16 | | UNKNOWN | blocks 383760..383775 in file "base/16384/16389" | f | f | t

91452 | 2 | 4785 | SUBMITTED | read | 996147200 | 131072 | smgr | 16 | | UNKNOWN | blocks 383744..383759 in file "base/16384/16389" | f | f | t

91452 | 3 | 4796 | SUBMITTED | read | 996409344 | 131072 | smgr | 16 | | UNKNOWN | blocks 383776..383791 in file "base/16384/16389" | f | f | t

91452 | 4 | 4802 | SUBMITTED | read | 996016128 | 131072 | smgr | 16 | | UNKNOWN | blocks 383728..383743 in file "base/16384/16389" | f | f | t

91452 | 5 | 3175 | COMPLETED_IO | read | 995885056 | 131072 | smgr | 16 | 131072 | UNKNOWN | blocks 383712..383727 in file "base/16384/16389" | f | f | t

(5 rows)

Understanding these behavior changes and the impact of asynchronous execution is essential when optimizing I/O performance in Postgres 18.
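When many I/Os are in flight, the raw pg_aios rows can be easier to read in aggregate. The following sketch uses only columns visible in the output above (pid, operation, state, length) and summarizes them per backend:

```sql
-- Summarize in-flight asynchronous I/Os per backend and state.
SELECT pid,
       operation,
       state,
       count(*)                    AS ios,
       pg_size_pretty(sum(length)) AS total_size
FROM pg_aios
GROUP BY pid, operation, state
ORDER BY pid, operation, state;
```

Because the view is a point-in-time snapshot, sampling it repeatedly while a query runs will likely give a better picture than a single call.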

Heads Up: Async I/O makes I/O timing information hard to interpret

Asynchronous I/O introduces a shift in how execution timing is reported. When the backend no longer blocks directly on disk reads (as is the case with worker or io_uring) the complete time spent doing I/O may not be reflected in EXPLAIN ANALYZE output. This can make I/O-bound queries seem to require less I/O effort than previously.

First, let's run the earlier query in EXPLAIN ANALYZE on a cold cache in Postgres 17:

test=# EXPLAIN (ANALYZE, BUFFERS, TIMING OFF) SELECT COUNT(*) FROM test;

QUERY PLAN

--------------------------------------------------------------------------------------------------------

Aggregate (cost=1692478.40..1692478.41 rows=1 width=8) (actual rows=1 loops=1)

Buffers: shared read=442478

I/O Timings: shared read=14779.316

-> Seq Scan on test (cost=0.00..1442478.32 rows=100000032 width=0) (actual rows=100000001 loops=1)

Buffers: shared read=442478

I/O Timings: shared read=14779.316

Planning:

Buffers: shared hit=13 read=6

I/O Timings: shared read=3.182

Planning Time: 8.136 ms

Execution Time: 18006.405 ms

(11 rows)

We've read 442,478 buffers in 14.8 seconds.

And now, we repeat the test on Postgres 18 with the default settings (io_method = worker):

test=# EXPLAIN (ANALYZE, BUFFERS, TIMING OFF) SELECT COUNT(*) FROM test;

QUERY PLAN

-----------------------------------------------------------------------------------------------------------

Aggregate (cost=1692478.40..1692478.41 rows=1 width=8) (actual rows=1.00 loops=1)

Buffers: shared read=442478

I/O Timings: shared read=7218.835

-> Seq Scan on test (cost=0.00..1442478.32 rows=100000032 width=0) (actual rows=100000001.00 loops=1)

Buffers: shared read=442478

I/O Timings: shared read=7218.835

Planning:

Buffers: shared hit=13 read=6

I/O Timings: shared read=2.709

Planning Time: 2.925 ms

Execution Time: 10480.827 ms

(11 rows)

We've read 442,478 buffers in 7.2 seconds.

Whilst with parallel query we get a summary of all the I/O time across all parallel workers, no such summarization occurs with I/O workers. What we are seeing is the wait time for the I/O to be completed, ignoring any parallelism that may happen behind the scenes.

This is technically not a behaviour change, since even in Postgres 17 the time reported was the time spent waiting on I/Os, not the time spent performing the I/O; for example, kernel I/O time for readahead was never accounted for.

Historically, I/O timing was often equated with I/O effort, because shared buffer read counts alone cannot distinguish a physical disk read from an OS page cache hit. Now, in Postgres 18, interpreting I/O timing requires more caution: asynchronous I/O can hide I/O overhead in query plans.
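One way to cross-check per-query timings is to look at cumulative read statistics by backend type in pg_stat_io. This is a sketch: read_time is only populated when track_io_timing is enabled, and how reads are attributed between client backends and I/O workers is something to verify on your own system rather than assume.

```sql
-- Cumulative reads and read time by backend type since the last
-- statistics reset. read_time requires track_io_timing = on.
SELECT backend_type, object, context, reads, read_time
FROM pg_stat_io
WHERE reads > 0
ORDER BY reads DESC;
```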

Conclusion

To summarize, the upcoming release of Postgres 18 marks the beginning of a major evolution in how I/O is handled. While currently limited to reads, asynchronous I/O already opens the door to significant performance improvements in high-latency cloud environments.

But some of these gains come with tradeoffs. Engineering teams will need to adjust their observability practices, learn new semantics for timing and wait events, and perhaps revisit tuning parameters with previously limited impact, like effective_io_concurrency.

In summary

- Asynchronous I/O support in Postgres 18 introduces worker (as the default) and io_uring options under the new io_method setting.

- Benchmarks show up to a 2-3x throughput improvement for read-heavy workloads in cloud environments.

- Observability practices need to evolve: EXPLAIN ANALYZE may underreport I/O effort, and new views like pg_aios will help provide insights.

- Tools like pganalyze will be adapting to these changes to continue surfacing relevant performance insights.

As Postgres development continues, future versions (19 and beyond) may bring asynchronous write support, further reducing I/O bottlenecks in modern workloads, and enabling production use of Direct I/O.

References

- PostgreSQL io_method GUC (Postgres 18)

- PostgreSQL effective_io_concurrency

- PostgreSQL Shared Buffers and Buffer Management

- pg_stat_activity View

- pg_stat_io View

- pg_aios View (New in Postgres 18)

- posix_fadvise() System Call

- Linux io_uring Man Page

- 5mins of Postgres: Waiting for Postgres 17: Streaming I/O for sequential scans & ANALYZE