Full Text Search in Milliseconds with Rails and PostgreSQL

Imagine the following scenario: You have a database full of job titles and descriptions, and you’re trying to find the best match. Typically you’d start by using an ILIKE expression, but this requires the search phrase to be an exact match. Then you might use trigrams, allowing spelling mistakes and inexact matches based on word similarity, but this makes it difficult to search using multiple words. What you really want to use is Full Text Search, providing the benefits of ILIKE and trigrams, with the added ability to easily search through large documents using natural language.

To summarize, here is a quick overview of popular built-in Postgres search options:

| Postgres Feature | Typical Use Case | Can be indexed? | Performance |

|---|---|---|---|

| LIKE/ILIKE | Wildcard-style search for small data | Sometimes | Unpredictable |

| pg_trgm | Similarity search for names, etc | Yes (GIN/GIST) | Good |

| Full Text Search | Natural language search | Yes (GIN/GIST) | Good |

In this article, we are going to learn about the inner workings of Full Text Search in Postgres and how to easily integrate Full Text Search into your Rails application using a fantastic gem named pg_search. We will learn how to search multiple columns at once, to give one column precedence over another, and how to optimize our Full Text Search implementation, taking a single query from 130ms to 7ms.

The full source code used in this article can be found here. Instructions on how to run this application locally and how to load the sample data referenced within this article can be found in the README.

If you are interested in efficient Full Text Search in Postgres with Django, you can read our article about it.

The Foundations of Full Text Search

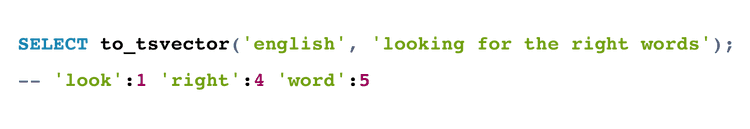

Let's break down the basics of Full Text Search, defining and explaining some of the most common terms you'll run into. Taking the text “looking for the right words”, we can see how Postgres stores this data internally, using the to_tsvector function:

SELECT to_tsvector('english', 'looking for the right words');

-- 'look':1 'right':4 'word':5In the above SQL we have some text; often referred to as a document when talking about Full Text Search. A document must be parsed and converted into a special data type called a tsvector, which we did using the function to_tsvector.

The tsvector data type is comprised of lexemes. Lexemes are normalized key words which were contained in the document that will be used when searching through it. In this case we used the english language dictionary to normalize the words, breaking them down to their root. This means that words became word, and looking became look, with very common words such as for and the being removed completely, to avoid false positives.

SELECT to_tsvector('english', 'looking for the right words') @@ to_tsquery('english', 'words');

-- TRUEThe @@ operator allows us to check if a query (data type tsquery) exists within a document (data type tsvector). Much like tsvector, tsquery is also normalized prior to searching the document for matches.

SELECT

ts_rank(

to_tsvector('looking for the right words'),

to_tsquery('english', 'words')

);

-- 0.06079271The ts_rank function takes a tsvector and a tsquery, returning a number that can be used when sorting the matching records, allowing us to sort the results from highest to lowest ranking.

Now that you have seen a few examples, let’s have a look at one last one before getting to Rails. Following, you can see an example of a query which searches through the jobs table where we are storing the title and description of each job. Here we are searching for the words ruby and rails, grabbing the 3 highest ranking results.

SELECT

id,

title,

ts_rank(

to_tsvector('english', title) || to_tsvector('english', description),

to_tsquery('english', 'ruby & rails')

) AS rank

FROM jobs

WHERE

to_tsvector('english', title) || to_tsvector('english', description) @@

to_tsquery('english', 'ruby & rails')

ORDER BY rank DESC

LIMIT 3The highest ranking result is a job with the title "Ruby on Rails Developer"... perfect! The full results of this query are:

[

{

"id": 1,

"title": "Ruby on Rails Developer",

"rank": 0.40266925

},

{

"id": 109,

"title": "Senior Ruby Developer - Remote",

"rank": 0.26552397

},

{

"id": 151,

"title": "Team-Lead Developer",

"rank": 0.14533159

}

]This query is actually concatenating (using ||) two tsvector fields together. This allows us to search both the title and the description at the same time. Later, we'll see how to give additional weight (precedence) to the title column.

Implementing Postgres Full Text Search in Rails

With a basic understanding of Full Text Search under our belts, it's time to take our knowledge over to Rails. We will be using the pg_search Gem, which can be used in two ways:

-

Multi Search: Search across multiple models and return a single array of results. Imagine having three models: Product, Brand, and Review. Using Multi Search we could search across all of them at the same time, seeing a single set of search results. This would be perfect for adding federated search functionality to your app.

-

Search Scope: Search within a single model, but with greater flexibility.

We will be focusing on the Search Scope approach in this article, as it lets us dive into the configuration options available when working with Full Text Search in Rails. Let's add the Gem to our Gemfile and get started:

# Gemfile

gem 'pg_search', '~> 2.3', '>= 2.3.2'With that done, we can include a module in our Job model, and define our first searchable field:

class Job < ApplicationRecord

include PgSearch::Model

pg_search_scope :search_title, against: :title

endThis adds a class level method to Job, allowing us to find jobs with the following line, which automatically returns them ranked from best match to worst.

Job.search_title('Ruby on Rails')If we were to append to_sql to the above Ruby statement, we can see the SQL that is being generated. I have to warn you, it’s a bit messy, but that is because it handles not only searching, but also putting the results in the correct order using the ts_rank function.

SELECT

"jobs".*

FROM

"jobs"

INNER JOIN (

SELECT

"jobs"."id" AS pg_search_id,

(ts_rank((to_tsvector('simple', coalesce("jobs"."title"::text, ''))), (to_tsquery('simple', ''' ' || 'Ruby' || ' ''') && to_tsquery('simple', ''' ' || 'on' || ' ''') && to_tsquery('simple', ''' ' || 'Rails' || ' ''')), 0)) AS rank

FROM

"jobs"

WHERE ((to_tsvector('simple', coalesce("jobs"."title"::text, ''))) @@ (to_tsquery('simple', ''' ' || 'Ruby' || ' ''') && to_tsquery('simple', ''' ' || 'on' || ' ''') && to_tsquery('simple', ''' ' || 'Rails' || ' ''')))) AS pg_search_5d9a17cb70b9733aadc073 ON "jobs"."id" = pg_search_5d9a17cb70b9733aadc073.pg_search_id

ORDER BY

pg_search_5d9a17cb70b9733aadc073.rank DESC,

"jobs"."id" ASCConfiguring pg_search

There are a number of ways you can configure pg_search: From support for prefixes and negation, to specifying which language dictionary to use when normalizing the document, as well as adding multiple, weighted columns.

By default pg_search uses the simple dictionary, which does zero normalization, but if we wanted to normalize our document using the english dictionary, searching across both the title and description, it would look like:

class Job < ApplicationRecord

include PgSearch::Model

pg_search_scope :search_job,

against: %i[title description],

using: { tsearch: { dictionary: 'english' } }

endWe can perform a search in the same way we did before: Job.search_job("Ruby on Rails"). If we wanted to give higher precedence to the title column, we can add weighting scores to each of the columns, with possible values of: A, B, C, D.

class Job < ApplicationRecord

include PgSearch::Model

pg_search_scope :search_job,

against: { title: 'A', description: 'B' },

using: { tsearch: { dictionary: 'english' } }

endWhen you start combining columns, weighting them, and choosing which dictionary provides the best results, it really comes down to trial and error. Play around with it, try some queries and see if the results you get back match with what you are expecting!

Optimizing Full Text Search Queries in Rails

We have a problem! The query that is produced by Job.search_job("Ruby on Rails") takes an astounding 130ms. That may not seem like such a large number, but it is astounding because there are only 145 records in my database. Imagine if there were thousands! The majority of time is spent in the to_tsvector function. We can verify this by running this streamlined query below, which takes almost as much time to execute as the full query which actually finds the matching jobs:

SELECT to_tsvector('english', description) FROM jobs;

-- ~130ms

SELECT description FROM jobs;

-- ~15msThis tells me that the slowness is in re-parsing and normalizing the document into a tsvector data type every single time the query is executed. The folks at thoughtbot have a great article about Full Text Search optimizations, where they add a pre-calculated tsvector column, keeping it up-to-date with triggers. This is great because it allows us to avoid re-parsing our document for every query and also lets us index this column!

There is a similar but slightly different approach I want to cover today which I learned by reading through the Postgres documentation. It also involves adding a pre-calculated tsvector column, but is done using a stored generated column. This means we don't need any triggers! It should be noted that this approach is only available in Postgres 12 and above. If you are using version 11 or earlier, the approach in the thoughtbot article is probably still the best one.

As we are venturing into the territory of more custom Postgres functionality, not easily supported by the Rails schema file in Ruby, we'll want to switch the schema format from :ruby to :sql. This line can be added to the application.rb file:

config.active_record.schema_format = :sqlNow, let's generate a migration to add a new column to the jobs table which will be automatically generated based on the setweight and to_tsvector functions:

class AddSearchableColumnToJobs < ActiveRecord::Migration[6.0]

def up

execute <<-SQL

ALTER TABLE jobs

ADD COLUMN searchable tsvector GENERATED ALWAYS AS (

setweight(to_tsvector('english', coalesce(title, '')), 'A') ||

setweight(to_tsvector('english', coalesce(description,'')), 'B')

) STORED;

SQL

end

def down

remove_column :jobs, :searchable

end

endNote that, as of the writing of this article, Postgres always requires a generated column to be a “Stored” column. That means it actually occupies space in your table and gets written on each INSERT/UPDATE. This also means that when you add a generated column to a table, it will require a rewrite of the table to actually set the values for all existing rows. This may block other operations on your database.

With our tsvector column added (which is giving precedence to the title over the description, is using the english dictionary, and is coalescing null values into empty strings), we're ready to add an index to it. Either GIN or GiST indexes can be used to speed up full text searches, but Postgres recommends GIN as the preferred index due to GiST searches being lossy, which may produce false matches. We'll add it concurrently to avoid locking issues when adding an index to large tables.

class AddIndexToSearchableJobs < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

add_index :jobs, :searchable, using: :gin, algorithm: :concurrently

end

endThe last thing we need to do is to tell pg_search to use our tsvector searchable column, rather than re-parsing the title and description fields each time. This is done by adding the tsvector_column option to tsearch:

class Job < ApplicationRecord

include PgSearch::Model

pg_search_scope :search_job,

against: { title: 'A', description: 'B' },

using: {

tsearch: {

dictionary: 'english', tsvector_column: 'searchable'

}

}

endWith this optimization done, we have gone from around 130ms to 7ms per query... not bad at all!

Conclusion

Let’s have a look at a real-life data set. We can prove the precision of our approach by looking at my database: Out of 145 jobs pulled from the GitHub and Hacker News job APIs, searching for "Ruby on Rails" returns the following results:

[

"Ruby on Rails Developer",

"Senior Ruby Developer - Remote",

"Gobble (YC W14) – Senior Full Stack Software Engineers – Toronto, On",

"DevOps (Remote - Europe)",

"CareRev (YC S16) Is Hiring a Senior Back End Engineer in Los Angeles",

"Software Engineer, Full Stack (Rails, React)",

"Software Engineer",

"Technology Solutions Developer"

]To summarize:

We have shown how to use Postgres' Full Text Search within Rails and also how to customize it both in terms of functionality, but also in terms of performance. We ended up with a performant and flexible solution right inside the database we were already using.

Many use cases for Full Text Search can be implemented directly inside Postgres, avoiding the need to install and maintain additional services such as Elasticsearch.

If you find this article useful and want to share it with your peers you can tweet about it here.

You might also be interested in

Learn more about how to make the most of Postgres and Ruby on Rails:

- eBook: Best Practices for Optimizing Postgres Query Performance

- Effectively Using Materialized Views in Ruby on Rails

- Efficient GraphQL queries in Ruby on Rails & Postgres

About the author

Leigh Halliday is a guest author for the pganalyze blog. He is a developer based out of Canada who works at FlipGive as a full-stack developer. He writes about Ruby and React on his blog and publishes React tutorials on YouTube.