Postgres Log Monitoring 101: Deadlocks, Checkpoint Tuning & Blocked Queries

Those of us who operate production PostgreSQL databases have many jobs to do - and often there isn't enough time to take a regular look at the Postgres log files.

However, often times those logs contain critical details on how new application code is affecting the database due to locking issues, or how certain configuration parameters cause the database to produce I/O spikes.

This post highlights three common performance problems you can find by looking at, and automatically filtering your Postgres logs.

Blocked Queries

One of the most performance-related log events are blocked queries, due to waiting for locks that another query has taken. On systems that have problems with locks you will often also see very high CPU utilization that can't be explained.

First, in order to enable logging of lock waits, set log_lock_waits = on in your Postgres config. This will emit a log event like the following if a query has been waiting for longer than deadlock_timeout (default 1s):

LOG: process 123 still waiting for ShareLock on transaction 12345678 after 1000.606 ms

STATEMENT: SELECT table WHERE id = 1 FOR UPDATE;

CONTEXT: while updating tuple (1,3) in relation “table”

DETAIL: Process holding the lock: 456. Wait queue: 123.This tells us that we're seeing lock contention on updates for table, as another transaction holds a lock on the same row we're trying to update. You can often see this caused by complex transactions that hold locks for too long. One frequent anti-pattern in a typical web app is to:

- Open a transaction

- Update a timestamp field (e.g.

updated_atin Ruby on Rails) - Make an API call to an external service

- Commit the transaction

The lock on the row that you updated in Step 2 will be held all the way to 4., which means if the API call takes a few seconds total, you will be holding a lock on that row for that time. If you have any concurrency in your system that affects the same rows, you will see lock contention, and the above lock notice for the queries in Step 2.

Often you however have to go back to a development or staging system with full query logging, to understand the full context of a transaction thats causing the problem.

Deadlocks

Related to blocked queries, but slightly different, are deadlocks, which result in a cancelled query due to it deadlocking against another query.

The easiest way to reproduce a deadlock is doing the following:

--- session 1

BEGIN;

SELECT * FROM table WHERE id = 1 FOR UPDATE;

--- session 2

BEGIN;

SELECT * FROM table WHERE id = 2 FOR UPDATE;

SELECT * FROM table WHERE id = 1 FOR UPDATE; --- this will block waiting for session 1 to finish

--- session 1

SELECT * FROM table WHERE id = 2 FOR UPDATE; --- this can never finish as it deadlocks against session 2Again after deadlock_timeout Postgres will see the locking problem. In this case it decides that this will never finish, and emit the following to the logs:

2018-02-12 09:24:52.176 UTC [3098] ERROR: deadlock detected

2018-02-12 09:24:52.176 UTC [3098] DETAIL: Process 3098 waits for ShareLock on transaction 219201; blocked by process 3099.

Process 3099 waits for ShareLock on transaction 219200; blocked by process 3098.

Process 3098: SELECT * FROM table WHERE id = 2 FOR UPDATE;

Process 3099: SELECT * FROM table WHERE id = 1 FOR UPDATE;

2018-02-12 09:24:52.176 UTC [3098] HINT: See server log for query details.

2018-02-12 09:24:52.176 UTC [3098] CONTEXT: while locking tuple (0,1) in relation "table"

2018-02-12 09:24:52.176 UTC [3098] STATEMENT: SELECT * FROM table WHERE id = 2 FOR UPDATE;You might think that deadlocks never happen in production, but the unfortunate truth is that heavy use of ORM frameworks can hide the circular dependency situation that produces deadlocks, and its certainly something to watch out for when you make use of complex transactions.

Checkpoints

Last but not least, checkpoints. For those unfamiliar, checkpointing is the mechanism by which PostgreSQL persists all changes to the data directory, which before were only in shared buffers and the WAL. Its what gives you a consistent copy of your data in one place (the data directory).

Due to the fact that checkpoints have to write out all the changes you've submitted to the database (which before were already written to the WAL), they can produce quite a lot of I/O - in particular when you are actively loading data.

The easiest way to produce a checkpoint is to call CHECKPOINT, but very few people would do that frequently in production. Instead Postgres has a mechanism that automatically triggers a checkpoint, most commonly due to either time, or xlog. After turning on log_checkpoints = 1 you can see this in the logs like this:

Feb 09 08:30:07am PST 12772 LOG: checkpoint starting: time

Feb 09 08:15:50am PST 12772 LOG: checkpoint starting: xlog

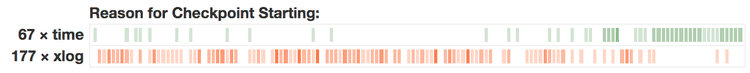

Feb 09 08:10:39am PST 12772 LOG: checkpoint starting: xlogOr when visualized over time, it can look like this:

Occasionally Postgres will also output the following warning, which hints at the tuning you can do:

Feb 09 10:21:11am PST 5677 LOG: checkpoints are occurring too frequently (17 seconds apart)With checkpoints you want to avoid having them occur to frequently, as each checkpoint will produce significant I/O, as well as cause all changes that are written to WAL right after to be written as a full-page write.

Ideally you would see checkpoints spaced out evenly and usually started by time instead of xlog. You can influence this behavior by the following config settings:

checkpoint_timeout- the time after which atimecheckpoint will be kicked off (defaults to every 5 minutes)max_wal_size- the maximum amount of WAL that will be accumulated before anxlogcheckpoint gets triggered (defaults to 1 GB)checkpoint_completion_target- how quickly a checkpoint finishes (defaults to0.5which means it will finish in half the time ofcheckpoint_timeout, i.e. 2.5 minutes)

On many production systems I've seen max_wal_size be increased to support higher write rates, checkpoint_timeout to be slightly increased as well to avoid too frequent time-based checkpoints, as well as setting checkpoint_completion_target to 0.9.

You should however tune all of this based on your own system, and the logs, so you can choose whats correct for your setup. Also note that less frequent checkpoints mean recovery of the server is going to take longer, as Postgres will have to replay all WAL, starting from the previous checkpoint, when booting after a crash.

Conclusion

Postgres log files contain a treasure of useful data you can analyze in order to make your system behave faster, as well as debug production issues. This data is readily available, but often difficult to parse.

This article tries to point the way towards which log lines are worth filtering for on production systems.

If you don't want to bother with setting up your own filters in a third party logging system, try out pganalyze Postgres Log Insights: a real-time PostgreSQL log analysis and log monitoring system built into pganalyze.