Postgres 11: Monitoring JIT performance, Auto Prewarm & Stored Procedures

Everyone’s favorite database, PostgreSQL, has a new release coming out soon: Postgres 11

In this post we take a look at some of the new features that are part of the release, and in particular review the things you may need to monitor, or can utilize to increase your application and query performance.

Just-In-Time compilation (JIT) in Postgres 11

Just-In-Time compilation (JIT) for query execution was added in Postgres 11. It's not going to be enabled for queries by default, similar to parallel query in Postgres 9.6, but can be very helpful for CPU-bound workloads and analytical queries.

Specifically, JIT currently aims to optimize two essential parts of query execution: Expression evaluation and tuple deforming. To quote the Postgres documentation:

Expression evaluation is used to evaluate WHERE clauses, target lists, aggregates and projections. It can be accelerated by generating code specific to each case.

Tuple deforming is the process of transforming an on-disk tuple into its in-memory representation. It can be accelerated by creating a function specific to the table layout and the number of columns to be extracted.

Often you will have a workload that is mixed, where some queries will benefit from JIT, and some will be slowed down by the overhead.

Here is how you can monitor JIT performance using EXPLAIN and auto_explain, as well as how you can determine whether your queries are benefiting from JIT optimization.

Monitoring JIT with EXPLAIN / auto_explain

First of all, you will need to make sure that your Postgres packages are compiled with JIT support (--with-llvm configuration switch). Assuming that you have Postgres binaries compiled like that, the jit configuration parameter controls whether JIT is actually being used.

For this example, we’re working with one of our staging databases, and pick a relatively simple query that can benefit from JIT:

SELECT COUNT(*) FROM log_lines

WHERE log_classification = 65 AND (details->>'new_dead_tuples')::integer >= 0;For context, the table log_lines is an internal log event statistics table of pganalyze, which is typically indexed per-server, but in this case we want to run an analytical query across all servers to count interesting autovacuum completed log events.

First, if we run the query with jit = off, we will get an execution plan and runtime like this:

EXPLAIN (ANALYZE, BUFFERS) SELECT COUNT(*) FROM log_lines

WHERE log_classification = 65 AND (details->>'new_dead_tuples')::integer >= 0;┌──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ QUERY PLAN │

├──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┤

│ Aggregate (cost=649724.03..649724.04 rows=1 width=8) (actual time=3498.939..3498.939 rows=1 loops=1) │

│ Buffers: shared hit=1538 read=386328 │

│ I/O Timings: read=1098.036 │

│ -> Seq Scan on log_lines (cost=0.00..649675.55 rows=19393 width=0) (actual time=0.028..3437.032 rows=667063 loops=1) │

│ Filter: ((log_classification = 65) AND (((details ->> 'new_dead_tuples'::text))::integer >= 0)) │

│ Rows Removed by Filter: 14396065 │

│ Buffers: shared hit=1538 read=386328 │

│ I/O Timings: read=1098.036 │

│ Planning Time: 0.095 ms │

│ Execution Time: 3499.089 ms │

└──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

(10 rows)

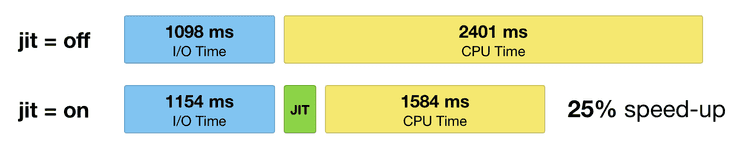

Time: 3499.580 ms (00:03.500)Note the usage of EXPLAIN's BUFFERS option so we can compare whether any caching behavior affects our benchmarking. We can also see that I/O time was 1,098 ms out of 3,499 ms, so this query is definitely CPU bound.

For comparison, when we enable JIT, we can see the following:

SET jit = on;

EXPLAIN (ANALYZE, BUFFERS) SELECT COUNT(*) FROM log_lines

WHERE log_classification = 65 AND (details->>'new_dead_tuples')::integer >= 0;┌───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ QUERY PLAN │

├───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┤

│ Aggregate (cost=649724.03..649724.04 rows=1 width=8) (actual time=2816.497..2816.498 rows=1 loops=1) │

│ Buffers: shared hit=1570 read=386296 │

│ I/O Timings: read=1154.438 │

│ -> Seq Scan on log_lines (cost=0.00..649675.55 rows=19393 width=0) (actual time=78.912..2759.717 rows=667063 loops=1) │

│ Filter: ((log_classification = 65) AND (((details ->> 'new_dead_tuples'::text))::integer >= 0)) │

│ Rows Removed by Filter: 14396065 │

│ Buffers: shared hit=1570 read=386296 │

│ I/O Timings: read=1154.438 │

│ Planning Time: 0.095 ms │

│ JIT: │

│ Functions: 4 │

│ Options: Inlining true, Optimization true, Expressions true, Deforming true │

│ Timing: Generation 1.044 ms, Inlining 14.205 ms, Optimization 46.678 ms, Emission 17.868 ms, Total 79.795 ms │

│ Execution Time: 2817.713 ms │

└───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

(14 rows)

Time: 2818.250 ms (00:02.818)In this case, JIT yields about a 25% speed-up, due to spending less CPU time, without any extra effort on our end. We can also see that JIT tasks themselves added 79 ms to the runtime.

You can fine tune whether JIT is used for a particular query by the jit_above_cost parameter which applies to the total cost of the query as determined by the Postgres planner. The cost is 649724 in the above EXPLAIN output, which exceeds the default jit_above_cost threshold of 100000. In a future post we'll walk through more examples of when using JIT can be beneficial.

You can gather these JIT statistics either for individual queries that you are interested in (using EXPLAIN), or automatically collect it for all of your queries using the auto_explain extension. If you want to learn more about how to enable auto_explain we recommend reviewing our guide about it: pganalyze Log Insights - Tuning Log Config Settings.

Fun fact: As part of the writing of this article we ran experiments with JIT and auto_explain, and discovered that JIT information wasn’t included with auto_explain, but only with regular EXPLAINs. Luckily, we were able to contribute a bug fix to Postgres, which has been merged and will be part of the Postgres 11 release.

Preventing cold caches: Auto prewarm in Postgres 11

A neat feature that will help you improve performance right after restarting Postgres, is the new autoprewarm background worker functionality.

If you are not familiar with pg_prewarm, its an extension thats bundled with Postgres (much like pg_stat_statements), that you can use to preload data that’s on disk into the Postgres buffer cache.

It is often very useful to ensure that a certain table is cached before the first production query hits the database, to avoid an overly slow response due to data being loaded from disk.

Previously, you needed to manually specify which relations (i.e. tables) and which page offsets to preload, which was cumbersome, and hard to automate.

Caching tables with autoprewarm

Starting in Postgres 11, you can instead have this done automatically, by adding pg_prewarm to shared_preload_libraries like this:

shared_preload_libraries = 'pg_prewarm,pg_stat_statements'Doing this will automatically save information on which tables/indices are in the buffer cache (and which parts of them) every 300 seconds to a file called autoprewarm.blocks, and use that information after Postgres restarts to reload the previously cached data from disk into the buffer cache, thus improving initial query performance.

Stored procedures in Postgres 11

Postgres has had database server-side functions for a long time, with a variety of supported languages. You might have used the term “procedures” before to refer to such functions, as they are similar to what’s called “Stored Procedures” in other database systems such as Oracle.

However, one detail that is sometimes missed, is that the existing functions in Postgres were always running within the same transaction. There was no way to begin, commit, or rollback a transaction within a function, as they were not allowed to run outside of a transaction context.

Starting in Postgres 11, you will have the ability to use CREATE PROCEDURE instead of CREATE FUNCTION to create procedures.

Benefits of using stored procedures

Compared to regular functions, procedures can do more than just query or modify data: They also have the ability to begin/commit/rollback transactions within the procedure.

Particularly for those moving over from Oracle to PostgreSQL, the new procedure functionality can be a significant time saver. You can find some examples of how to convert procedures between those two relational database systems in the Postgres documentation.

How to use stored procedures

First, let’s create a simple procedure that handles some tables:

CREATE PROCEDURE my_table_task() LANGUAGE plpgsql AS $$

DECLARE

BEGIN

CREATE TABLE table_committed (id int);

COMMIT;

CREATE TABLE table_rolled_back (id int);

ROLLBACK;

END $$;We can then call this procedure like this, using the new CALL statement:

=# CALL my_table_task();

CALL

Time: 1.573 msHere you can see the benefit of procedures - despite the rollback the overall execution is successful, and the first table got created, but the second one was not since the transaction was rolled back.

Be careful: Transaction timestamps and xact_start for procedures

Expanding on how transactions work inside procedures, there is currently an oddity with the transaction timestamp, which for example you can see in xact_start. When we expand the procedure like this:

CREATE PROCEDURE my_table_task() LANGUAGE plpgsql AS $$

DECLARE

clock_str TEXT;

tx_str TEXT;

BEGIN

CREATE TABLE table_committed (id int);

SELECT clock_timestamp() INTO clock_str;

SELECT transaction_timestamp() INTO tx_str;

RAISE NOTICE 'After 1st CREATE TABLE: % clock, % xact', clock_str, tx_str;

PERFORM pg_sleep(5);

COMMIT;

CREATE TABLE table_rolled_back (id int);

SELECT clock_timestamp() INTO clock_str;

SELECT transaction_timestamp() INTO tx_str;

RAISE NOTICE 'After 2nd CREATE TABLE: % clock, % xact', clock_str, tx_str;

ROLLBACK;

END $$;And then call the procedure, we see the following:

=# CALL my_table_task();

NOTICE: 00000: After 1st CREATE TABLE: 2018-10-03 22:17:26 clock, 2018-10-03 22:17:26 xact

NOTICE: 00000: After 2nd CREATE TABLE: 2018-10-03 22:17:31 clock, 2018-10-03 22:17:26 xact

CALL

Time: 5022.598 msDespite there being two transactions in the procedure, the transaction start timestamp is that of when the procedure got called, not when the embedded transaction actually started.

You will see the same problem with the xact_start field in pg_stat_activity, causing monitoring scripts to potentially detect false positives for long running transactions. This issue is currently in discussion and likely to be changed before the final release.

How often does my stored procedure get called?

Now, if you want to monitor the performance of procedures, it gets a bit difficult. Whilst regular functions can be tracked using track_functions = on, there is no such facility for procedures. You can however track the execution of CALL statements using pg_stat_statements:

SELECT query, calls, total_time FROM pg_stat_statements WHERE query LIKE 'CALL%';┌────────────┬───────┬────────────┐

│ query │ calls │ total_time │

├────────────┼───────┼────────────┤

│ CALL abc() │ 4 │ 5.62299 │

└────────────┴───────┴────────────┘In addition, when you enable pg_stat_statements.track = all, queries that are called from within a procedure will be tracked, and made available in Postgres query performance monitoring tools such as pganalyze.

Conclusion

Postgres 11 is going to be the best Postgres release yet, and we are excited to put it into use.

Whilst common wisdom is to not upgrade right after a release, we encourage you to try out the new release early, help the community find bugs (just like we did!), and make sure that your performance monitoring systems are ready to handle the new features that were added.

PS: If this article was useful to you and you want to share it with your peers you can tweet it by clicking here.